This is the first in a series of short posts about ChatGPT, based on my experience as this tool becomes more and more a part of my daily toolset. My comments are not highly original; I’m just raising awareness of issues.

ChatGPT can sound very human, and yet its behaviour is very unlike a human’s in many ways. So we need to try to map its behaviour using familiar terms. First up: it lies.

ChatGPT occasionally lies. Truly. It fabricated a reference entirely when I was looking up Penrose and Hameroff. When I called it on it, it apologized, but refused to explain itself, although it said it would not do so anymore in the future (after I told it not to). WTF? Here is the false reference: Penrose, R., & Hameroff, S. (2011). Consciousness and the universe: Quantum physics, evolution, brain and mind. Cosmology, Evolution, and the Mind, 61-74.

— Dr Jordan B Peterson (@jordanbpeterson) April 19, 2023

The only thing shocking about Peterson’s tweet here is that he was apparently shocked by ChatGPT’s behaviour. ChatGPT is a Large Language Model, which means it’s designed to capture many things about how human language works, English specifically. It excels at the task of “carry on the current sentence in a plausible way”. In other words, it is designed to make things up.

Some people refer to this as ChatGPT “hallucinating”, which seems a little too generous. It is, by design, in a permanent state of dreaming, and its dreams happen to contain elements of truth, so saying “it hallucinated” is kind of misleading.

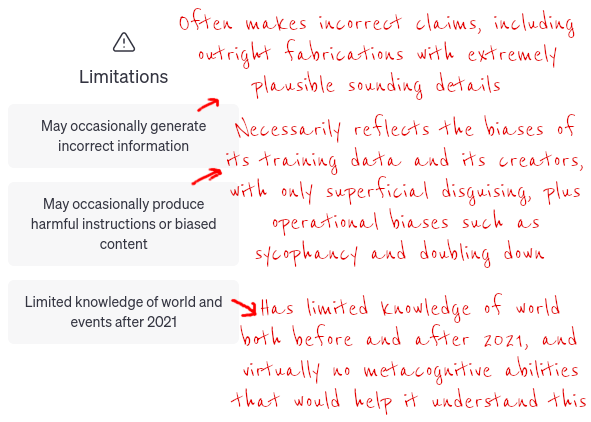

I think we can explain Peterson’s surprise given the extremely weak disclaimer that OpenAI have put on their product. This is my improved version:

I’m not saying that you should think of ChatGPT’s capabilities as only “guessing the next word” – it’s clear that it can do far more than that. But you should not assume its default state is truthfulness.

Given its starting point, ChatGPT actually does surprisingly well at telling the truth most of the time, but it still lies an awful lot, often when you least suspect it, and always with complete confidence, with great panache and without the smallest blush.

For example, I often use it to help me write Elisp code, and it will very often invent functions and variables that simply do not exist, or claim that packages do things that they just don’t. It’s very rare that the things it makes up stick out as being false – when it makes up a function, the name and description are exactly what you would expect. Often the invented functions are ones I wish existed, but in fact don’t. When I’m asking ChatGPT for solutions it will happily invent just what I want to hear. Here is one exchange, with my question first and ChatGPT’s answer after it:

In Emacs with the abbrevs feature, is there a way to change which characters Emacs allows to be used as part of the abbreviation?

Yes, you can change the regular expression that Emacs uses to determine which characters are allowed as part of an abbreviation. By default, Emacs considers only alphabetic characters and digits to be valid abbreviation characters.

To change the default behavior, you can customize the abbrev-char-regexp variable. Here is how to do it:

1. Open your .emacs configuration file by typing C-x C-f ~/.emacs in Emacs.
2. Add the following line to your configuration file:
3. Save and close the file.

In this example, the regular expression \\sw\\|\\s_ specifies that any character that is considered a word constituent (\\sw) or an underscore (\\s_) can be used as part of an abbreviation.

You can customize the regular expression as needed to allow other characters in abbreviations. Just remember that changing the regular expression can have unintended consequences, so it is a good idea to test your abbreviations thoroughly after making any changes.

abbrev-char-regexp is completely made up, but very convincingly named and described, and everything else makes sense.

Even when you know this, it’s extremely easy to get caught out. Humans don’t usually lie for no reason at all, so we are not trained to be suspicious of everything continually – you just can’t live like that. You lower your level of suspicion when people would have no motive to lie. That won’t work with ChatGPT.

Therefore…

My point is not that ChatGPT is useless – like others I’m finding it very useful – but if you are going to use it, here are some hints about how to do so without hurting yourself or others:

Only use it in situations where reliability of output is not important. Surprisingly, there are quite a few situations where that is the case, but there are many where it is not. ChatGPT makes a terrible general-purpose “research assistant”. It has a significant amount of general knowledge, but the flaw is that it will lie and lead you in random directions or, worse, biased directions. In the future, you’ll be unlikely to remember whether that “fact” you remember was one you read from a reputable source or just invented by ChatGPT.

Ideally, you should use ChatGPT only when the nature of the situation forces you to verify the truthfulness of what you’ve been told, or where truthfulness doesn’t matter at all.

For example, ChatGPT is pretty good at idea generation, because you are automatically going to be a filter for things that make sense.

The main use for me is problem solving, for certain kinds of problems.

Specifically, there are classes of problems where solutions can be hard to find but easy to verify, and this is often true in computer programming, because code is text that has the slightly unusual property of being “functional”. If the code “works” then it is “true”.

Well, sometimes. If I ask for code that draws a red triangle on a blue background, I can pretty easily tell whether it works or not, and if it is for a context that I don’t know well (e.g. a language or operating system or type of programming), ChatGPT can often get correct results massively faster than looking up docs, as it is able to synthesize code using vast knowledge of different systems.

There are still big dangers when doing this, however. It’s extremely easy to get code that appears to work, but has some small detail that causes a major bug. Simon Willison gave an example where the benchmark code ChatGPT wrote for him started the timer in the wrong place. But it still generated an attractive looking graph that looked correct, and wrote most of the code very well, making it extremely easy to skip the close review that is necessary.
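To make that kind of bug concrete, here is a minimal sketch of a misplaced timer. It is not Willison’s code, and the post he wrote is the place to look for what actually went wrong in his case. The functions `slow_setup`, `benchmark_wrong` and `benchmark_right` are invented for this illustration, and the sleep is a stand-in for any expensive preparation step.

```python
import time


def slow_setup(n):
    """Hypothetical setup step; the sleep stands in for expensive preparation."""
    time.sleep(0.05)
    return list(range(n, 0, -1))


def benchmark_wrong(n):
    # BUG: the timer starts before setup, so setup time is counted too.
    start = time.perf_counter()
    data = slow_setup(n)
    sorted(data)
    return time.perf_counter() - start


def benchmark_right(n):
    # Setup happens first; only the operation of interest is timed.
    data = slow_setup(n)
    start = time.perf_counter()
    sorted(data)
    return time.perf_counter() - start


wrong = benchmark_wrong(1000)
right = benchmark_right(1000)
print(f"timer before setup: {wrong:.4f}s")
print(f"timer after setup:  {right:.4f}s")
```

Both versions run without error and both produce a number you can plot, which is exactly why the mistake is easy to miss on a quick read.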

Other things that you cannot easily verify include: whether the code actually works for more than the handful of inputs you test against; whether you have performance issues, especially at scale; whether you have security problems.
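The “handful of inputs” problem can be partly reduced by comparing the code against a trusted reference over many random inputs. The sketch below assumes such a reference exists (here, Python’s standard `statistics.median`). `naive_median` is a deliberately buggy stand-in written for this example, not something ChatGPT produced, and `find_counterexample` is likewise a made-up helper.

```python
import random
import statistics


def naive_median(xs):
    """Deliberately buggy: correct for odd-length lists only."""
    return sorted(xs)[len(xs) // 2]


def find_counterexample(trials=1000, seed=0):
    """Return the first random input where naive_median disagrees with the reference, or None."""
    rng = random.Random(seed)  # fixed seed so a failure can be reproduced
    for _ in range(trials):
        xs = [rng.randint(0, 100) for _ in range(rng.randint(1, 8))]
        if naive_median(xs) != statistics.median(xs):
            return xs
    return None


# The hand-picked checks all pass, because they all happen to have odd length.
for sample in ([3, 1, 2], [5], [1, 2, 3, 4, 5]):
    assert naive_median(sample) == statistics.median(sample)

print("counterexample:", find_counterexample())
```

Random checking like this only covers correctness against a reference; it says nothing about performance or security, which still need their own review.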

Even when I know it lies, I can fail to verify individual claims, and then waste half an hour debugging something that could never have worked, because ChatGPT just invented a feature and I took it for granted that it existed. So beware!
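One cheap guard against invented features is to check that a suggested name exists before building anything on it. Below is a minimal sketch in Python. The helper `name_exists` is made up for this illustration, and `join_safely` is a plausible-sounding name invented here to play the role of a fabricated function. In Emacs itself the comparable checks would be the built-in `boundp` (for variables) and `fboundp` (for functions).

```python
import importlib


def name_exists(module_name, attr_path):
    """Return True if module_name imports and the dotted attr_path resolves on it."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


print(name_exists("os.path", "join"))         # a real function
print(name_exists("os.path", "join_safely"))  # invented for this example
```

A check like this only confirms that a name exists. It does not confirm that the function does what ChatGPT said it does, so the documentation is still worth a look.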